Hierarchical vs Actual for n_clusters=3 df = iris.targetįig, axes = plt.subplots(1, 2, figsize=(16,8))Īxes.scatter(df, df, c=df)Īxes.scatter(df, df, c=df, cmap=plt.cm.Set1)Īxes. Notice that we can define clusters based on the linkage distance by changing the criterion to distance in the fcluster function! Run the Hierarchical Clustering # Assign cluster labelsĭf = fcluster(distance_matrix, 3, criterion='maxclust')

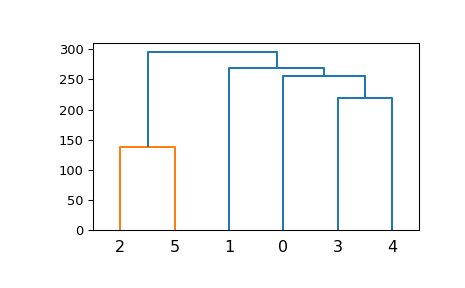

# Import the dendrogram functionįrom import dendrogramįrom the dendrogram we can realize that a good candidate for the number of Clusters is 3 and that 2 clusters are closer (the red ones) compared to the green one.

It is a branching diagram that demonstrates how each cluster is composed by branching out into its child nodes. The interesting thing about the dendrogram is that it can show us the differences in the clusters. How many Clusters – Introduction to dendrogramsĭendrograms help in showing progressions as clusters are merged. These are part of a so called Dendrogram and display the hierarchical clustering (Bock, 2013). # Import the fcluster and linkage functionsįrom import fcluster, linkageĭistance_matrix = linkage(scaled_data, method = 'ward', metric = 'euclidean') The top of the U-link indicates a cluster merge. The dendrogram illustrates how each cluster is composed by drawing a U-shaped link between a non-singleton cluster and its children. Look at the documentation of the `linkage` function to see the available methods and metrics. Plot the hierarchical clustering as a dendrogram. Let’s check if the variance of every feature is close to 1 now: pd.DataFrame(scaled_data).describe()Ĭreat the Distance Matrix based on linkage Also, we can whiten the values which is a process of rescaling data to a standard deviation of 1: There are many different approaches like standardizing or normalizing the values etc. When we apply Cluster Analysis we need to scale our data. If we want to see the names of the target: iris.target_namesĪrray(, dtype=' Let’s see the number of targets that the Iris dataset has and their frequency: np.unique(iris.target,return_counts=True) We will work with the famous Iris Dataset. We have provided an example of K-means clustering and now we will provide an example of Hierarchical Clustering.
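To make the workflow in this post easier to try end to end, here is a minimal sketch of scaling, linkage and cutting the tree with both the `maxclust` and the `distance` criterion. It is only an illustration under stated assumptions: three synthetic 2-D blobs stand in for the Iris features so that the example depends only on NumPy and SciPy, `whiten` from `scipy.cluster.vq` is assumed as the whitening step, and the names `data`, `Z`, `threshold`, `labels_maxclust` and `labels_distance` are made up for this sketch.

```python
import numpy as np
from scipy.cluster.hierarchy import dendrogram, fcluster, linkage
from scipy.cluster.vq import whiten

# Assumption: three well-separated synthetic blobs replace the Iris features.
rng = np.random.default_rng(0)
centers = np.array([[0.0, 0.0], [10.0, 0.0], [0.0, 10.0]])
data = np.vstack([rng.normal(loc=c, scale=0.5, size=(30, 2)) for c in centers])

# Whitening divides each feature by its standard deviation.
scaled_data = whiten(data)

# Ward linkage on Euclidean distances; Z is the linkage matrix.
# Each row of Z describes one merge, and column 2 is the merge distance.
Z = linkage(scaled_data, method='ward', metric='euclidean')

# Option 1: ask for at most 3 flat clusters.
labels_maxclust = fcluster(Z, 3, criterion='maxclust')

# Option 2: cut the tree at a distance threshold instead.
# The last two merges reduce 3 clusters to 1, so a threshold between the
# third-to-last and second-to-last merge distances leaves 3 clusters.
threshold = (Z[-3, 2] + Z[-2, 2]) / 2
labels_distance = fcluster(Z, t=threshold, criterion='distance')

# The dendrogram structure can also be computed without drawing it.
tree = dendrogram(Z, no_plot=True)

print("clusters (maxclust):", np.unique(labels_maxclust))
print("clusters (distance):", np.unique(labels_distance))
print("number of leaves:", len(tree['leaves']))
```

With the `distance` criterion the number of clusters is a consequence of the chosen threshold, which is why reading the merge heights off the dendrogram is a natural way to pick it.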
